NVIDIA InfiniBand Products

InfiniBand Switches

Looking for InfiniBand switches? Here you will find the right IB models for every requirement.

InfiniBand Adapters

We offer the right IB network adapters at speeds of up to 400Gb/s.

Cables, Modules & Transceivers

For suitable IB and GbE cables, modules, or transceivers, please visit the corresponding subpage.

Looking for Gigabit Ethernet solutions? Then check out our selection of NVIDIA GbE switches and adapters.

InfiniBand Switch Systems

NVIDIA's family of InfiniBand switches delivers the highest performance and port density with complete fabric management solutions, enabling compute clusters and converged data centers to operate at any scale while reducing operational costs and infrastructure complexity. The portfolio includes a broad range of Edge and Director switches supporting port speeds of up to 800Gb/s and ranging from 8 to 800 ports.

Quantum-X800 Switches

NVIDIA Quantum-X800 InfiniBand switches deliver 800 gigabits per second (Gb/s) of throughput, ultra-low latency, advanced NVIDIA In-Network Computing, and features that elevate overall application performance within high-performance computing (HPC) and AI data centers.

| Quantum-X800 | Q3200-RA | Q3400-RA | Q3401-RD | Q3450-LD |
|---|---|---|---|---|
| Rack mount | 2U | 4U | 4U | 4U |
| Ports | 36 | 144 | 144 | 144 |
| Speed | 800Gb/s | 800Gb/s | 800Gb/s | 800Gb/s |
| Performance | Two switches each of 28.8Tb/s throughput | 115.2Tb/s throughput | 115.2Tb/s throughput | 115.2Tb/s throughput |
| Switch radix | Two switches of 36 800Gb/s non-blocking ports | 144 800Gb/s non-blocking ports | 144 800Gb/s non-blocking ports | 144 800Gb/s non-blocking ports |
| Connectors and cabling | Two groups of 18 OSFP connectors | 72 OSFP connectors | 72 OSFP connectors | 144 MPO connectors |
| Management ports | Separate OSFP 400Gb/s InfiniBand in-band management port (UFM) | Separate OSFP 400Gb/s InfiniBand in-band management port (UFM) | Separate OSFP 400Gb/s InfiniBand in-band management port (UFM) | Separate OSFP 400Gb/s InfiniBand in-band management port (UFM) |
| Connectivity | Pluggable | Pluggable | Pluggable | MPO12 (Optics-only) |
| CPU | Intel CFL 4 cores i3-8100H 3GHz | Intel CFL 4 cores i3-8100H 3GHz | Intel CFL 4 cores i3-8100H 3GHz | Intel CFL 4 cores i3-8100H 3GHz |
| Security | CPU/CPLD/Switch IC based on IRoT | CPU/CPLD/Switch IC based on IRoT | CPLD/Switch IC based on IRoT | CPU/CPLD/Switch IC based on IRoT |
| Software | NVOS | NVOS | NVOS | NVOS |
| Cooling mechanism | Air cooled | Air cooled | Air cooled | Liquid cooled (85%), air cooled (15%) |
| EMC (emissions) | CE, FCC, VCCI, ICES, and RCM | CE, FCC, VCCI, ICES, and RCM | CE, FCC, VCCI, ICES, and RCM | CE, FCC, VCCI, ICES, and RCM |
| Product safety compliant/certified | RoHS, CB, cTUVus, CE, and CU | RoHS, CB, cTUVus, CE, and CU | RoHS, CB, cTUVus, CE, and CU | RoHS, CB, cTUVus, CE, and CU |
| Power Feed | 200-240V AC | 200-240V AC | 48-54V DC | 48V DC |
| Warranty | One year | One year | One year | One year |

Edge Switches

The Edge family of switch systems provides the highest-performing fabric solutions in a 1U form factor, delivering up to 51.2Tb/s of non-blocking bandwidth with the lowest port-to-port latency. These edge switches are an ideal choice for top-of-rack leaf connectivity or for building small to medium-sized clusters. Offered as externally managed or internally managed switches, they are designed to build the most efficient switch fabrics through advanced InfiniBand switching technologies such as Adaptive Routing, Congestion Control, and Quality of Service.

| NVIDIA SWITCH-IB 2 SERIES | MSB7880 | MSB7890 |
|---|---|---|
| Type | Router | Switch |
| SHARP | SHARP v1 | SHARP v1 |
| Ports | 36 | 36 |
| Height | 1U | 1U |
| Switching Capacity | 7.2Tb/s | 7.2Tb/s |
| Link Speed | 100Gb/s | 100Gb/s |
| Interface Type | QSFP28 | QSFP28 |
| Management | Yes (internally) | No (only externally) |
| Management Ports | 1x RJ45, 1x RS232, 1x USB | 1x RJ45 |
| Power | 1+1 redundant and hot-swappable, 80 Gold+ and ENERGY STAR certified | 1+1 redundant and hot-swappable, 80 Gold+ and ENERGY STAR certified |
| System Memory | 4GB RAM DDR3 | 4GB RAM DDR3 |
| Storage | 16GB SSD | 16GB SSD |
| Cooling | Front-to-rear or rear-to-front (hot-swappable fan unit) | Front-to-rear or rear-to-front (hot-swappable fan unit) |

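| NVIDIA QUANTUM SERIES | MQM8700 | MQM8790 | MQM9700 | MQM9790 |
|---|---|---|---|---|
| Series | Quantum | Quantum | Quantum-2 | Quantum-2 |
| SHARP | SHARP v2 | SHARP v2 | SHARP v3 | SHARP v3 |
| Ports | 40 | 40 | 64 | 64 |
| Height | 1U | 1U | 1U | 1U |
| Switching Capacity | 16Tb/s | 16Tb/s | 51.2Tb/s | 51.2Tb/s |
| Link Speed | 200Gb/s | 200Gb/s | 400Gb/s | 400Gb/s |
| Interface Type | QSFP56 | QSFP56 | OSFP | OSFP |
| Management | Yes (internally) | No (only externally) | Yes (internally) | No (only externally) |
| Management Ports | 1x RJ45, 1x RS232, 1x micro USB | 1x RJ45, 1x RS232, 1x micro USB | 1x USB3.0, 1x USB for I2C, 1x RJ45, 1x RJ45 (UART) | 1x USB3.0, 1x USB for I2C, 1x RJ45, 1x RJ45 (UART) |
| Power | 1+1 redundant and hot-swappable, 80 Gold+ and ENERGY STAR certified | 1+1 redundant and hot-swappable, 80 Gold+ and ENERGY STAR certified | 1+1 redundant and hot-swappable, 80 Gold+ and ENERGY STAR certified | 1+1 redundant and hot-swappable, 80 Gold+ and ENERGY STAR certified |
| System Memory | Single 8GB | Single 8GB | Single 8GB DDR4 SO-DIMM | Single 8GB DDR4 SO-DIMM |
| Storage | - | - | M.2 SSD SATA 16GB 2242 FF | M.2 SSD SATA 16GB 2242 FF |
| Cooling | Front-to-rear or rear-to-front (hot-swappable fan unit) | Front-to-rear or rear-to-front (hot-swappable fan unit) | Front-to-rear or rear-to-front (hot-swappable fan unit) | Front-to-rear or rear-to-front (hot-swappable fan unit) |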
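To give a rough sense of what "small to medium-sized clusters" can mean for these port counts, the sketch below sizes a non-blocking two-tier (leaf/spine) fat tree built from identical edge switches. The topology rule used here (half of each leaf's ports face hosts, half face spines) is a standard textbook assumption, not a sizing method taken from this page, and the names `FatTree` and `two_tier_fat_tree` are purely illustrative.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FatTree:
    radix: int           # ports per switch
    leaf_switches: int
    spine_switches: int
    hosts: int           # end nodes attached at full link speed


def two_tier_fat_tree(radix: int) -> FatTree:
    """Size a non-blocking two-tier fat tree built from identical switches.

    Assumption: each leaf uses half of its ports for hosts and half for
    uplinks, with one uplink to every spine; each spine port serves one leaf.
    """
    if radix < 2 or radix % 2:
        raise ValueError("radix must be a positive even number")
    down_ports = radix // 2
    return FatTree(
        radix=radix,
        leaf_switches=radix,
        spine_switches=radix // 2,
        hosts=radix * down_ports,
    )


# Port counts taken from the edge switch tables above.
for model, ports in [("MSB7890", 36), ("MQM8790", 40), ("MQM9790", 64)]:
    tree = two_tier_fat_tree(ports)
    print(f"{model}: {tree.hosts} hosts, "
          f"{tree.leaf_switches} leaves, {tree.spine_switches} spines")
```

Under these assumptions a 36-port switch tops out at 648 hosts, a 40-port switch at 800, and a 64-port switch at 2048. Real deployments may oversubscribe uplinks or add a third tier, so treat the figures as an upper bound for this one topology.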

Modular Switches

NVIDIA's director modular switches provide the highest-density switching solution, scaling from 8.64Tb/s up to 320Tb/s of bandwidth in a single enclosure, with low latency and per-port speeds of up to 200Gb/s. Their smart design provides unprecedented levels of performance and makes it easy to build clusters that can scale out to thousands of nodes. The InfiniBand modular switches deliver the director-class availability required for mission-critical application environments. The leaf and spine blades and the management modules, as well as the power supplies and fan units, are all hot-swappable to help eliminate downtime.

| Managed 324 - 800 ports | CS7520 | CS7510 | CS7500 | CS8500 |
|---|---|---|---|---|
| Ports | 216 | 324 | 648 | 800 |
| Height | 12U | 16U | 28U | 29U |
| Switching Capacity | 43.2Tb/s | 64.8Tb/s | 130Tb/s | 320Tb/s |
| Link Speed | 100Gb/s | 100Gb/s | 100Gb/s | 200Gb/s |
| Interface Type | QSFP28 | QSFP28 | QSFP28 | QSFP56 |
| Management | 2048 nodes | 2048 nodes | 2048 nodes | 2048 nodes |
| Management HA | Yes | Yes | Yes | Yes |
| Console Cables | Yes | Yes | Yes | Yes |
| Spine Modules | 6 | 9 | 18 | 20 |
| Leaf Modules (max.) | 6 | 9 | 18 | 20 |
| PSU Redundancy | Yes (N+N) | Yes (N+N) | Yes (N+N) | Yes (N+N) |
| Fan Redundancy | Yes | Yes | Yes | Water cooled |
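The capacity figures in the switch tables follow from simple arithmetic on port count and link speed. The sketch below reproduces a few of them. The `directions` parameter is an inference from the listed numbers, not a stated vendor convention: the edge and modular tables appear to count both directions of each link, while the Quantum-X800 throughput figures match a single direction. The helper name `aggregate_tbps` is hypothetical.

```python
def aggregate_tbps(ports: int, link_gbps: int, directions: int = 2) -> float:
    """Aggregate bandwidth in Tb/s: ports x link speed x directions counted."""
    return ports * link_gbps * directions / 1000


# (model, ports, link speed in Gb/s, directions counted, value listed in Tb/s)
ROWS = [
    ("MSB7890", 36, 100, 2, 7.2),
    ("MQM8700", 40, 200, 2, 16.0),
    ("MQM9700", 64, 400, 2, 51.2),
    ("CS7510", 324, 100, 2, 64.8),
    ("Q3400-RA", 144, 800, 1, 115.2),  # listed figure matches one direction
]

for model, ports, speed, directions, listed in ROWS:
    computed = aggregate_tbps(ports, speed, directions)
    status = "matches" if abs(computed - listed) < 0.05 else "differs from"
    print(f"{model}: computed {computed:.1f} Tb/s {status} listed {listed} Tb/s")
```

When comparing products across families, it helps to check which convention a given figure uses before comparing the headline numbers directly.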

Benefits of NVIDIA Switch Systems

- Built with Nvidia's 4th and 5th generation InfiniScale® and SwitchX™ switch silicon

- Industry-leading energy efficiency, density, and cost savings

- Ultra low latency

- Real-Time Scalable Network Telemetry

- Scalability and subnet isolation using InfiniBand routing and InfiniBand to Ethernet gateway capabilities

- Granular QoS for Cluster, LAN and SAN traffic

- Quick and easy setup and management

- Maximizes performance by removing fabric congestion

- Fabric Management for cluster and converged I/O applications

InfiniBand VPI Host-Channel Adapters

Nvidia continues to lead the way in providing InfiniBand Host Channel Adapters (HCA) - the most powerful interconnect solution for enterprise data centers, Web 2.0, cloud computing, high performance computing and embedded environments.

ConnectX-7 SmartNIC Adapter Cards

With up to four ports of connectivity and 400Gb/s of throughput, the NVIDIA ConnectX-7 SmartNIC provides hardware-accelerated networking, storage, security, and manageability services at data center scale for cloud, telecommunications, AI, and enterprise workloads. ConnectX-7 empowers agile and high-performance networking solutions with features such as Accelerated Switching and Packet Processing (ASAP²), advanced RoCE, GPUDirect Storage, and in-line hardware acceleration for Transport Layer Security (TLS), IP Security (IPsec), and MAC Security (MACsec) encryption and decryption.

| ConnectX-7 | MCX75310AAS-HEAT | MCX75310AAS-NEAT | MCX753436MC-HEAB, MCX753436MS-HEAB, MCX755106AC-HEAT, MCX755106AS-HEAT | MCX715105AS-WEAT | MCX755106AS-HEAT, MCX755106AC-HEAT | MCX75310AAC-NEAT, MCX75343AMC-NEAC, MCX75343AMS-NEAC |
|---|---|---|---|---|---|---|
| ASIC & PCI Dev ID | ConnectX®-7 | ConnectX®-7 | ConnectX®-7 | ConnectX®-7 | ConnectX®-7 | ConnectX®-7 |
| Speed | 200GbE / NDR200 | 400GbE / NDR | 200GbE / NDR200 | 400GbE / NDR | 200GbE / NDR200 | 400GbE / NDR |
| Technology | VPI*3 | VPI*2 | VPI | VPI | VPI | VPI |
| Ports | 1 | 1 | 2 | 1 | 2 | 1 |
| Connectors | QSFP | QSFP | QSFP112 | OSFP112 | OSFP112 | OSFP |
| PCIe | PCIe 5.0 x16 | PCIe 5.0 x16 | PCIe 5.0 x16 | PCIe 5.0 x16 | PCIe 5.0 x16 | PCIe 5.0 x16 |
| Secure Boot | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ |
| Crypto | - | - | (✔)*1 | - | (✔)*1 | (✔)*1 |
| Form Factor | HHHL | HHHL | MCX753436: SFF; MCX755106: HHHL | HHHL | HHHL | MCX75310: HHHL; MCX75343: SFF |

| ConnectX-7 | MCX713104AC-ADAT, MCX713104AS-ADAT | MCX713114TC-GEAT | MCX75510AAS-HEAT | MCX753436MC-HEAB, MCX753436MS-HEAB | MCX75343AMC-NEAC, MCX75343AMS-NEAC |
|---|---|---|---|---|---|
| ASIC & PCI Dev ID | ConnectX®-7 | ConnectX®-7 | ConnectX®-7 | ConnectX®-7 | ConnectX®-7 |
| Speed | 50/25GbE | 50/25GbE | NDR200 | 200GbE / NDR200 / HDR | 400GbE / NDR |
| Technology | Ethernet | Ethernet | IB | VPI | VPI |
| Ports | 4 | 4 | 1 | 2 | 1 |
| Connectors | SFP56 | SFP56 | OSFP | QSFP112 | OSFP |
| PCIe | PCIe 4.0 x16 | PCIe 4.0 x16 | PCIe 5.0 x16 | PCIe 5.0 x16 | PCIe 5.0 x16 |
| Secure Boot | ✔ | ✔ | ✔ | ✔ | ✔ |
| Crypto | (✔)*1 | ✔ | - | (✔)*1 | (✔)*1 |
| Form Factor | HHHL | FHHL | HHHL | SFF | TSFF |

HHHL (Tall Bracket) = 6.6" x 2.71" (167.65mm x 68.90mm); FHHL = 4.53" x 6.6" (115.15mm x 167.65mm); TSFF = Tall Small Form Factor; OCP 3.0 SFF (Thumbscrew Bracket) = 4.52" x 2.99" (115.00mm x 76.00mm)

*2 The MCX75310AAS-NEAT card supports InfiniBand and Ethernet protocols from hardware version AA and higher.

*3 The MCX75310AAS-HEAT card supports InfiniBand and Ethernet protocols from hardware version A7 and higher.

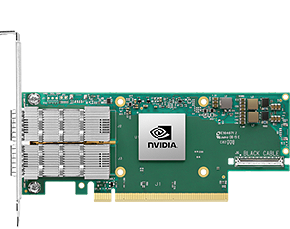

ConnectX-6 VPI Cards

ConnectX-6 with Virtual Protocol Interconnect® (VPI) supports two ports of 200Gb/s InfiniBand and Ethernet connectivity, sub-600 nanosecond latency, and 200 million messages per second, providing the highest performance and most flexible solution for the most demanding applications and markets. With one of the highest throughputs and message rates in the industry across 200Gb/s HDR InfiniBand, 100Gb/s HDR100 InfiniBand, and 200Gb/s Ethernet, it is well suited to lead HPC data centers toward exascale levels of performance and scalability. Supported speeds are HDR, HDR100, EDR, FDR, QDR, DDR, and SDR InfiniBand as well as 200, 100, 50, 40, 25, and 10Gb/s Ethernet. All cards are backward compatible with the lower speeds.

| ConnectX-6 | MCX653436A-HDAB*1 | MCX653105A-HDAT*1 | MCX653106A-HDAT*1 |
|---|---|---|---|
| ASIC & PCI Dev ID | ConnectX®-6 | ConnectX®-6 | ConnectX®-6 |
| Technology | VPI | VPI | VPI |
| Speed | HDR/200GbE | HDR/200GbE | HDR/200GbE |
| Ports | 2 | 1 | 2 |
| Connectors | QSFP56 | QSFP56 | QSFP56 |
| PCI | PCIe 4.0 | PCIe 4.0 | PCIe 4.0 |
| Lanes | x16 | x16 | x16 |
| Crypto | - | - | - |
| Form Factor | PCIe stand-up; without bracket: 167.65mm x 68.90mm; tall bracket | PCIe stand-up; without bracket: 167.65mm x 68.90mm; tall bracket | PCIe stand-up; without bracket: 167.65mm x 68.90mm; tall bracket |

| ConnectX-6 | MCX651105A-EDAT | MCX653105A-ECAT | MCX653106A-ECAT |
|---|---|---|---|
| ASIC & PCI Dev ID | ConnectX®-6 | ConnectX®-6 | ConnectX®-6 |
| Technology | VPI | VPI | VPI |
| Speed | HDR100/EDR IB/100GbE | HDR100/EDR IB/100GbE | HDR100/EDR IB/100GbE |
| Ports | 1 | 1 | 2 |
| Connectors | QSFP56 | QSFP56 | QSFP56 |
| PCI | PCIe 4.0 | PCIe 3.0/4.0 | PCIe 3.0/4.0 |
| Lanes | x8 | x16 | x16 |
| Crypto | - | - | - |
| Form Factor | PCIe stand-up; without bracket: 167.65mm x 68.90mm; tall bracket | PCIe stand-up; without bracket: 167.65mm x 68.90mm; tall bracket | PCIe stand-up; without bracket: 167.65mm x 68.90mm; tall bracket |
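When matching an adapter to a server, it can help to check whether the PCIe slot can carry the combined line rate of all ports. The sketch below estimates one-direction PCIe bandwidth from the per-lane signalling rate and the 128b/130b line encoding used by PCIe 3.0 through 5.0. It ignores packet and protocol overhead, so the usable figure is somewhat lower than computed here. The function names are hypothetical and the check is a rough rule of thumb, not a vendor sizing tool.

```python
# Per-lane signalling rate in GT/s for PCIe generations 3 to 5.
GT_PER_LANE = {3: 8, 4: 16, 5: 32}
# These generations use 128b/130b line encoding.
ENCODING_EFFICIENCY = 128 / 130


def pcie_gbps(gen: int, lanes: int) -> float:
    """Upper bound on one-direction PCIe bandwidth in Gb/s (encoding only)."""
    return GT_PER_LANE[gen] * lanes * ENCODING_EFFICIENCY


def slot_covers_ports(port_gbps: int, ports: int, gen: int, lanes: int) -> bool:
    """True if the slot's upper bound covers all ports running at line rate."""
    return pcie_gbps(gen, lanes) >= port_gbps * ports


# Configurations taken from the adapter tables above.
examples = [
    ("ConnectX-7, 1x 400Gb/s, PCIe 5.0 x16", 400, 1, 5, 16),
    ("ConnectX-6 MCX653106A-HDAT, 2x 200Gb/s, PCIe 4.0 x16", 200, 2, 4, 16),
    ("ConnectX-6 MCX651105A-EDAT, 1x 100Gb/s, PCIe 4.0 x8", 100, 1, 4, 8),
]
for label, speed, ports, gen, lanes in examples:
    verdict = "covers" if slot_covers_ports(speed, ports, gen, lanes) else "does not cover"
    print(f"{label}: slot limit {pcie_gbps(gen, lanes):.0f} Gb/s {verdict} "
          f"{speed * ports} Gb/s of port bandwidth")
```

By this estimate a PCIe 5.0 x16 slot offers roughly 504Gb/s and a PCIe 4.0 x16 slot roughly 252Gb/s per direction, so a dual-port 200Gb/s card in a PCIe 4.0 x16 slot cannot drive both ports at full line rate at the same time.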

The Strengths of Mellanox VPI Host-Channel Adapters

The benefits

- World-class cluster performance

- High-performance networking and storage access

- Efficient use of compute resources

- Cutting-edge performance in virtualized overlay networks (VXLAN and NVGRE)

- Increased VM per server ratio

- Guaranteed bandwidth and low-latency services

- Reliable transport

- Efficient I/O consolidation, lowering data center costs and complexity

- Scalability to tens-of-thousands of nodes

Target Applications

- High-performance parallelized computing

- Data center virtualization

- Public and private clouds

- Large scale Web 2.0 and data analysis applications

- Clustered database applications, parallel RDBMS queries, high-throughput data warehousing

- Latency sensitive applications such as financial analysis and trading

- Performance storage applications such as backup, restore, mirroring, etc.

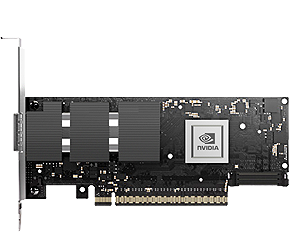

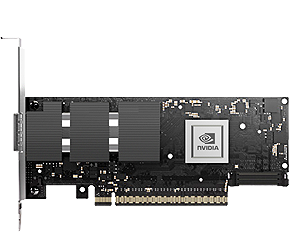

NVIDIA DPU (Data Processing Unit)

The NVIDIA® BlueField® Data Processing Unit (DPU) is a system on a chip: a hardware accelerator specialized for complex tasks such as fast data processing and data-centric computing. Its primary purpose is to offload network and communication tasks from the CPU, freeing CPU resources by taking over application-supporting work such as data transfer, data reduction, data security, and analytics. A DPU is particularly suitable for supercomputing-class workloads such as AI, cloud, and big data. A dedicated operating system on the chip can run alongside the host's primary operating system and offers functions such as encryption, erasure coding, and compression or decompression.

| BlueField®-2 IB DPUs | MBF2M345A | MBF2H516A | MBF2H516C | MBF2M516A | MBF2M516C |
|---|---|---|---|---|---|
| Series / Core Speed | E-Series / 2.0GHz | P-Series / 2.75GHz | P-Series / 2.75GHz | E-Series / 2.0GHz | E-Series / 2.0GHz |
| Form Factor | Half-Height Half-Length (HHHL) | Full-Height Half-Length (FHHL) | Full-Height Half-Length (FHHL) | Full-Height Half-Length (FHHL) | Full-Height Half-Length (FHHL) |
| Ports | 1x QSFP56 | 2x QSFP56 | 2x QSFP56 | 2x QSFP56 | 2x QSFP56 |
| Speed | 200GbE / HDR | 100GbE / EDR / HDR100 | 100GbE / EDR / HDR100 | 100GbE / EDR / HDR100 | 100GbE / EDR / HDR100 |
| PCI | PCIe 4.0 x16 | PCIe 4.0 x16 | PCIe 4.0 x16 | PCIe 4.0 x16 | PCIe 4.0 x16 |
| On-board DDR | 16GB | 16GB | 16GB | 16GB | 16GB |
| On-board eMMC | 64GB | 64GB | 128GB | 64GB | 128GB |
| Secure Boot | ✓ | - | ✓ | ✓ (*1) | ✓ |
| Crypto | ✓ (*1) | ✓ (*1) | ✓ (*1) | ✓ (*1) | ✓ (*1) |
| 1GbE OOB | ✓ | ✓ | ✓ | ✓ | ✓ |
| Integrated BMC | - | - | ✓ | ✓ | ✓ |
| External Power | - | ✓ | ✓ | - | - |
| PPS IN/OUT | - | - | - | ✓ | ✓ |

BlueField® IB DPUs (first generation)

| Model Name | Description | Crypto |
|---|---|---|
| MBF1L516A-ESCAT | BlueField® DPU VPI EDR IB (100Gb/s) and 100GbE, Dual-Port QSFP28, PCIe Gen4.0 x16, BlueField® G-Series 16 cores, 16GB on-board DDR, FHHL, Single Slot, Tall Bracket | ✓ |
| MBF1L516A-ESNAT | BlueField® DPU VPI EDR IB (100Gb/s) and 100GbE, Dual-Port QSFP28, PCIe Gen4.0 x16, BlueField® G-Series 16 cores, 16GB on-board DDR, FHHL, Single Slot, Tall Bracket | - |